Robin Hanson gave a very interesting TED talk about a possible technological singularity based on AI and robotics. I outline his talk below and add a few ideas from Hanson’s 1998 article “Long-Term Growth As A Sequence of Exponential Modes”, Ray Kurzweil’s essay (2001) and book (2005), and Bill Joy’s Wired article (2000).

Notes

Hanson’s “Great Eras” graph illustrates major economic revolutions and their effect on growth rates on a log-log scale. Humans doubled their population about every 200,000 years until the agricultural age started 10,000 years ago.1 In the agricultural age, human population and production doubled every 1,000 years. Then the industrial revolution occurred and the world economy started doubling every 15 years. Each revolution increased the growth rate by a factor of 50 to 200. (Kurzweil extends this pattern of revolutions into both the past and the future.)
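The "factor of 50 to 200" can be checked directly from the doubling times quoted above. The short sketch below is my own arithmetic, not something from the talk. It assumes steady exponential growth within each era, so the growth rate is inversely proportional to the doubling time. The names `doubling_years`, `annual_growth_rate`, and `speedup` are just illustrative.

```python
import math

# Doubling times in years, as quoted in the paragraph above.
doubling_years = {
    "hunter-gatherer": 200_000,
    "agricultural": 1_000,
    "industrial": 15,
}

def annual_growth_rate(doubling_time_years):
    """Continuous annual growth rate implied by a doubling time."""
    return math.log(2) / doubling_time_years

def speedup(old_doubling, new_doubling):
    """Factor by which the growth rate rises when the doubling time shrinks."""
    return old_doubling / new_doubling

farming_speedup = speedup(doubling_years["hunter-gatherer"],
                          doubling_years["agricultural"])
industry_speedup = speedup(doubling_years["agricultural"],
                           doubling_years["industrial"])

print(f"farming: x{farming_speedup:.0f}, industry: x{industry_speedup:.0f}")
```

With these inputs the agricultural revolution comes out as a 200-fold speedup and the industrial revolution as roughly a 67-fold speedup, both inside the quoted range.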

What if a third major economic revolution occurred? How could it occur?

Robots.

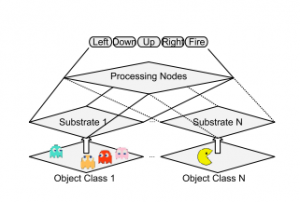

For the past 10,000 years, human economic growth has been based on land, capital, and labor. For the past 200 years we have increased world productivity through population growth, education, and bigger and better tools. Intelligent robots could reproduce more quickly than us, transfer knowledge faster, and they would be their own tools. Robots or artificial intelligences would be so productive that we could experience an economy where our production doubled every month. The quantity of stories, art, television, games, science, philosophy, and legal opinions produced would be phenomenal.
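To put "doubled every month" in perspective, here is a rough back-of-the-envelope sketch of my own (not from the talk). It compares a one-month doubling time with the 15-year doubling time of the industrial era, assuming steady exponential growth. The variable names are illustrative.

```python
# Doubling times expressed in months to keep the arithmetic exact.
INDUSTRIAL_DOUBLING_MONTHS = 15 * 12   # economy doubling every 15 years
ROBOT_DOUBLING_MONTHS = 1              # hypothesized doubling every month

# Growth rate is inversely proportional to doubling time.
speedup = INDUSTRIAL_DOUBLING_MONTHS / ROBOT_DOUBLING_MONTHS

# Twelve doublings per year compound to 2**12.
doublings_per_year = 12 // ROBOT_DOUBLING_MONTHS
yearly_growth_factor = 2 ** doublings_per_year

print(f"speedup over industrial era: x{speedup:.0f}")
print(f"output multiplies by {yearly_growth_factor} each year")
```

The speedup works out to 180, in line with the 50 to 200 jump of the earlier revolutions, and twelve doublings a year would multiply output by 4,096 annually.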

Hanson suggests a path to a humanistic robotic world created by this third economic revolution. Artificial intelligence might first be created by mapping the human brain and uploading human minds into computer hardware. The most productive uploaded minds would proliferate the fastest and thus would dominate. They would be immortal and they would be able to travel across the world quickly and cheaply. Humans would not be able to compete and perhaps we would all retire. The uploaded human minds would live in a beautiful virtual reality.

Hanson describes a society of virtual minds. The most productive minds would acquire or create the most computer resources. Their software personality emulations would run the fastest relative to real time. He speculates about the structure of this virtual society, including its caste system, the working habits of its members, and the virtual worlds that the different castes would inhabit.

1. This estimate is from “Long-Term Growth As A Sequence of Exponential Modes” and is based on “Population Bottlenecks and Pleistocene Human Evolution” (Hawks et al., 2000) and “On the number of members of the genus Homo who have ever lived, and some evolutionary implications” (Weiss, 1984).