I’ve been reading “Category Theory for Programmers”, which Mark Ettinger suggested to me. The book presents many examples in C++ and Haskell and teaches you some Haskell along the way. It uses almost no upper-level mathematics and skips almost all of the mathematical formalities. If you decide to read it, you might first want to read the first six chapters of “Learn You a Haskell for Great Good!” and write a few small Haskell programs. (I would also suggest trying to solve the first three problems in Project Euler, https://projecteuler.net/archives, using Haskell.)

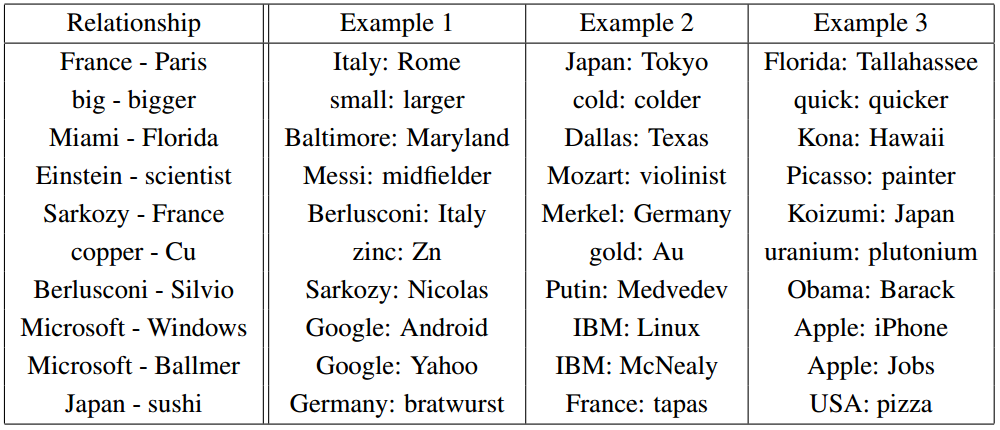

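Interestingly, in category theory the distributive law $A \times (B+C) = A \times B + A \times C$, which says that products distribute over coproducts, can be translated into each of the following theorems: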

- A*(B+C) = A*B + A*C for all positive integers A, B, and C,

- max(A, min(B, C)) = min(max(A, B), max(A, C)) for all real numbers A, B, and C,

- lcm(A, gcd(B, C)) = gcd(lcm(A, B), lcm(A, C)), where lcm means least common multiple and gcd means greatest common divisor, and

- intersection(A, union(B, C)) = union(intersection(A, B), intersection(A, C)) for all sets A, B, and C.
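A brief aside, offered as a sketch of where the four theorems come from: each one reads $\times$ as the categorical product and $+$ as the coproduct in a different category. In a category built from an ordered set, with a single arrow $x \to y$ exactly when $x$ is related to $y$, the product of two objects is their greatest lower bound and the coproduct is their least upper bound with respect to that order. The choices below yield the four bullets (note that the orders used for the real numbers and the integers are the reverses of the usual ones):

$$
\begin{array}{lll}
\text{category} & A \times B & A + B \\ \hline
\text{finite sets and functions} & \text{Cartesian product} & \text{disjoint union} \\
\text{real numbers, arrow } x \to y \text{ iff } x \ge y & \max(A, B) & \min(A, B) \\
\text{positive integers, arrow } x \to y \text{ iff } y \mid x & \operatorname{lcm}(A, B) & \gcd(A, B) \\
\text{subsets of a set, arrow } x \to y \text{ iff } x \subseteq y & A \cap B & A \cup B
\end{array}
$$

Substituting the third row, for example, turns $A \times (B+C) = A \times B + A \times C$ into $\operatorname{lcm}(A, \gcd(B, C)) = \gcd(\operatorname{lcm}(A, B), \operatorname{lcm}(A, C))$. For finite sets the law holds up to isomorphism, and counting elements (a Cartesian product of sets of sizes $a$ and $b$ has $ab$ elements, a disjoint union has $a + b$) gives the integer identity. The law does not hold in every category; the three ordered examples work because each of those orders is a distributive lattice.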

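The following Mathematica code spot-checks each identity on 100 randomly chosen triples A, B, C; `Tally` reports how many comparisons came out `True` and how many came out `False`.

```mathematica
(* Evaluate prod[a, sum[b, c]] == sum[prod[a, b], prod[a, c]] on n random
   triples drawn by calling funcRandChoose[], then tally the True/False results.
   Module keeps a, b, c local, so the protected system symbol C is never touched. *)
test[funcRandChoose_, prod_, sum_, n_Integer] := Tally[Table[
    Module[{a = funcRandChoose[], b = funcRandChoose[], c = funcRandChoose[]},
     prod[a, sum[b, c]] == sum[prod[a, b], prod[a, c]]],
    {n}]]

test[RandomInteger[{1, 1000}] &, Times, Plus, 100]
test[RandomInteger[{-1000, 1000}] &, Max, Min, 100]
test[RandomInteger[{-1000, 1000}] &, LCM, GCD, 100]
(* RandomChoice picks a single subset of {1, ..., 5}; RandomSample would return a
   shuffled list of all 32 subsets, which makes the check trivially True. *)
test[RandomChoice[Subsets[Range[5]]] &, Intersection, Union, 100]
```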