The other day, Carl mentioned that Euclidean Geometry was decidable. I thought that was impossible because I thought it would have an isomorphic copy of Peano arithmetic which is not decidable. Later he pointed me toward Tarski’s axioms. Here’s a quote from the Wikipedia page “This fact allowed Tarski to prove that Euclidean geometry is decidable: there exists an algorithm which can determine the truth or falsity of any sentence.” I found the whole article to be pretty cool because I had never really dug into geometry as a first order predicate calculus.

Mathematician and Father. Into games, astronomy, psychology and philosophy.

The article “Big Data and the Hourly Workforce” (see Slashdot comments) repeats the known story that Big Data is being used by companies to improve their product. It is used by Target to target customers (by, for example, identifying pregnant women), by the Center for Disease Control to identify outbreaks, to predicted box office hits from Twitter, and by political campaigns to target the right voters with the right message. The article implies that Big Data algorithms identify patterns in the data which companies can exploit.

But then the article gets into application of Big Data to the hourly work force. Companies are focusing on improving worker retention, worker productivity, customer satisfaction, and average revenue per sale. Big Data is being used to increase each of these metrics five to twenty-five percent. As in baseball (Moneyball), some of the old rules of thumb like excluding “job hoppers” or even those with an old criminal history are being rejected by the data in favor of machine learning features like distance from work or the Meyers Briggs personality classification which are supported by the data.

This 2011 tutorial (Parts 1 and 2) is the best introduction to stochastic and adversarial bandits and details recent advances in research that I have seen. Many of the bandit papers that I have linked to in previous posts are quoted. Some experimental results and suggested parameter values are given. Part one mentions that regret may be much larger than the expected regret as per the paper “Exploration-exploitation tradeoff using variance estimates in multi-armed bandits” by Audibert, Munos, Szepesvári (2009). It describes the UCB-Horizon algorithm and Hoeffding-based GCL∗ policy which alleviate this problem. Part two is titled “Bandits with large sets of actions” and mostly deals with the case of infinitely many bandits. Many algorithms are described including Hierarchical Optimistic Optimization and a huge bibliography is provided at the end of the tutorial.

In “Knowledge-Based General Game Playing” Haufe, Michulke, Schiffel, and Thielscher (2011) discuss their general game playing program FLUXPLAYER. They summarize their article as

“This article gives an overview of five essential methods for knowledge-based General Game Playing:

1. We show how to automatically generate evaluation functions for non-terminal positions.

2. We demonstrate the application of machine learning

techniques to improve these evaluation functions.

3. We illustrate how a system can automatically derive new

properties of games using automated theorem proving.

4. We present two specific techniques that help to reduce the

complexity of the search space by mimicking the ability

of humans to

(a) detect symmetries in games and

(b) identify subgames in composite games.”

FLUXPLAYER creates a fuzzy logic position evaluation function based on the rules for “for terminal and goal states”, persistent makers (such as pieces), a “logic-to-neural-network transformation”, simulations, temporal difference learning, symmetry detection, factoring (“Given its mere rules, how can a previously unknown game be automatically decomposed into independent subgames?”), automated theorem proving, and the $C- IL^2P$ algorithm.

In “Future impact: Predicting scientific success“, Acuna, Allesina, and Kording predict the future h-index of scientist using their current h-index, the square root of the number of articles published, years since first publication, number of distinct journals, and the number of articles in top journals. They vary the coefficients of a linear regression with the number of years in the forecast and note that, in the short term the largest coefficient is (not surprisingly) the scientist’s current h-index, but in the long term, the number of articles in top journals and the number of distinct journals become more important for the 10 year h-index forecast. They achieve an $R^2$ value of 0.67 for neuro-scientists which is significantly larger than the $R^2$ using h-index alone (near 0.4).

Additionally, they provide an on-line tool you can use to make your own predictions.

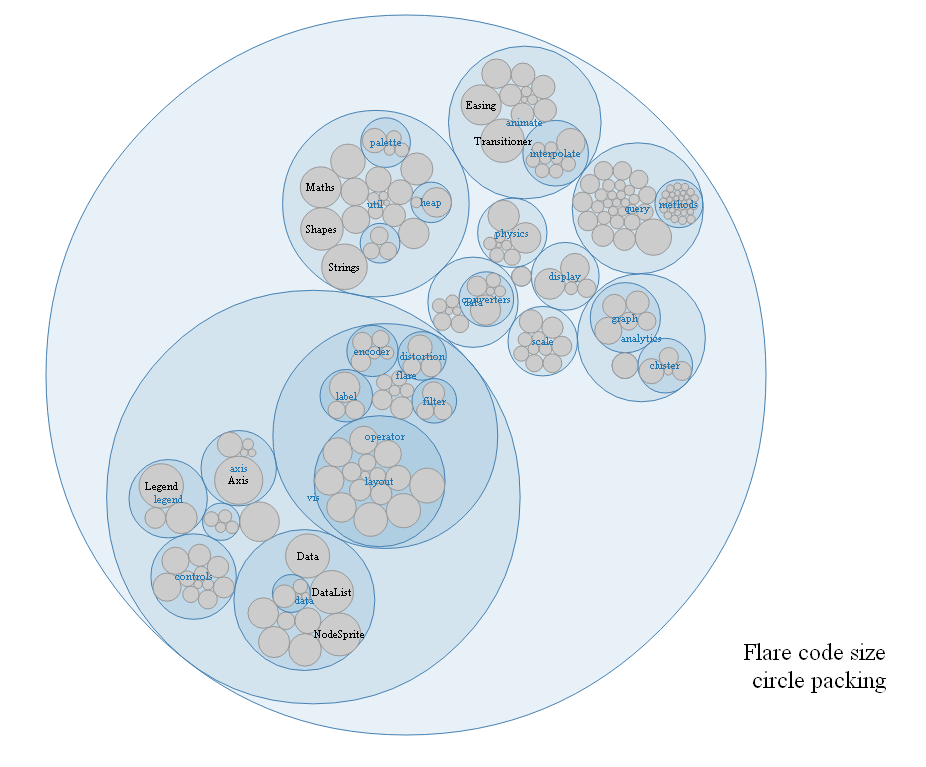

Another cool link from Carl. “D3.js is a JavaScript library for manipulating documents based on data. “

In the paper “Finite-time Analysis of the Multiarmed Bandit Problem“, Auer, Cesa-Bianchi, and Fisher (2002) prove that for the fixed reward distributions, “the optimal logarithmic regret is also achievable uniformly over time, with simple and efficient policies, and for all reward distributions with bounded support.”

The paper discusses the following three ideas:

1) The expected regret for the upper confidence bound algorithm UCB1 is bounded above by

$$\left[ 8 \sum_{i:\mu_i < \mu^*} \left({\log n \over {\mu^* - \mu_i}}\right)\right] + \left( 1 + {\pi^2\over 3}\right)\left(\sum_{j=1}^K (\mu^*-\mu_j) \right)$$

where $\mu_i$ is the mean of the $i$th distribution, $n$ is the number of pulls, and $K$ is the number of arms if the rewards are between 0 and 1.

2) They improve on the coefficient of $\log n$ with a slightly more complex algorithm UCB2.

3) The $\epsilon_n = 1/n$ greedy algorithm has $O(\log n)$ regret.

They conclude the paper with numerical results.

In “Regret Bounds for Sleeping Experts and Bandits” Kleinberg, Niculescu-Mizil, and Sharma (2008) propose an algorithm for selecting among a list of experts some of whom may be sleeping. At each time step $t$, the algorithm ranks the experts in order of preference with a permutation vector $\sigma(t)$. The first analyzed algorithm is Follow The Awake Leader (FTAL) for the case where all of the expert predictions and rewards are observed. Next they explore a natural extension of upper confidence bound algorithm for the multi-armed bandit setting. In the adversarial setting, they prove that the regret is at least $O(\sqrt{t\, K \log K})$ for $K$ bandits at time $t$.

“Mixture of Vector Experts” by Henderson, Shawe-Taylor, and Zerovnik (2005) describes an algorithm for mixing the predictions of several experts. Each expert gives at prediction vector in $\{0,1\}^n$ at time $t$. Their experimental evidence suggests that their algorithm outperforms a similar componentwise scalar mixture of experts.