In “Machine Learning Techniques—Reductions Between Prediction Quality Metrics”, Beygelzimer, Langford, and Zadrozny (2009?) summarize a number of “techniques, called reductions, for converting a problem of minimizing one loss function into a problem of minimizing another, simpler loss function.” They give a simplified overview of machine learning algorithms and sampling methods, and relate them to error-correcting codes and regret minimization.
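To make the link between reductions and error-correcting codes concrete, here is a minimal sketch of an output-code style reduction from a 4-class problem to six binary problems. The code matrix `CODE` and the helper names `encode` and `decode` are my own choices for illustration and are not taken from the paper. Each column would be handed to a binary learner; the learners themselves are left out, so only the encode and decode steps are shown.

```python
import numpy as np

# Hypothetical code matrix: one 6-bit codeword per class.
# Any two rows differ in 4 positions, so one wrong bit can be corrected.
CODE = np.array([
    [0, 0, 0, 0, 0, 0],
    [1, 0, 1, 1, 0, 1],
    [0, 1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1, 0],
])

def encode(labels):
    """Turn multiclass labels into one row of binary targets per example."""
    return CODE[np.asarray(labels)]

def decode(bits):
    """Map predicted bit vectors back to the class with the nearest codeword."""
    bits = np.atleast_2d(bits)
    # Hamming distance from every predicted vector to every codeword.
    dist = (bits[:, None, :] != CODE[None, :, :]).sum(axis=2)
    return dist.argmin(axis=1)

# One binary predictor gets class 2 wrong; decoding still recovers the label.
noisy = encode([2]).copy()
noisy[0, 3] ^= 1
print(decode(noisy))
```

The design point is that a mistake by one binary predictor does not have to become a multiclass mistake, which is the sort of loss-to-loss statement a reduction makes.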

The New York Times article “Skilled Work, Without the Worker” describes how robots in places like the Netherlands and California are starting to displace Chinese factories filled with low-skilled workers. They write

The falling costs and growing sophistication of robots have touched off a renewed debate among economists and technologists over how quickly jobs will be lost. This year, Erik Brynjolfsson and Andrew McAfee, economists at the Massachusetts Institute of Technology, made the case for a rapid transformation. “The pace and scale of this encroachment into human skills is relatively recent and has profound economic implications,” they wrote in their book, “Race Against the Machine.”

In a review of “Race Against the Machine”, Bill Jarvis writes

In “Race Against the Machine”, economists Erik Brynjolfsson and Andrew McAfee ask the question: Could technology be destroying jobs? They then expand on that to explore whether advancing information technology might be an important contributor to the current unemployment disaster. The authors argue very convincingly that the answer to both questions is YES.

which reminds me of Robin Hanson’s paper “Economic Growth Given Machine Intelligence”.

I’ve been looking at versions of AdaBoost that are less sensitive to noise, such as SoftBoost. SoftBoost works by ignoring a number of outliers set by the user (the parameter $v$), finding weak learners that are not highly correlated with the weak learners already in the boosted mix, and updating the distribution by KL projection onto the set of distributions that are uncorrelated with the mistakes of the latest learner and do not place too much weight on any particular data point. SoftBoost avoids overfitting by stopping when no feasible point is found for the KL projection.
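Here is a rough sketch of just one piece of that update: the KL projection of a weight vector onto distributions where no point gets more than a fixed cap. It deliberately leaves out the correlation constraints, so it is not the full SoftBoost step. The function name `cap_distribution` and the argument `cap` are hypothetical, and I am assuming strictly positive input weights. For this constraint alone the projection has the form $d_i = \min(\text{cap}, c\,p_i)$ for a suitable constant $c$, which the loop finds by repeatedly capping and rescaling.

```python
import numpy as np

def cap_distribution(p, cap):
    """KL-project positive weights p onto {d : sum(d) = 1, d_i <= cap}.

    Only the weight cap is handled here; the edge (correlation)
    constraints of the full boosting update are omitted.
    """
    p = np.asarray(p, dtype=float)
    n = len(p)
    if cap * n < 1.0:
        raise ValueError("infeasible: cap * n must be at least 1")
    d = p / p.sum()
    capped = np.zeros(n, dtype=bool)
    while True:
        over = (d > cap + 1e-12) & ~capped
        if not over.any():
            return d
        capped |= over
        d[capped] = cap
        # Spread the remaining mass over the uncapped points,
        # keeping their ratios to each other unchanged.
        free = ~capped
        free_mass = 1.0 - cap * capped.sum()
        d[free] = p[free] / p[free].sum() * free_mass

print(cap_distribution([0.7, 0.1, 0.1, 0.1], cap=0.4))
```

In this example the heavy point is pulled down to the cap and the other three points split the remaining mass equally, so a single noisy example cannot dominate the next round.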

In “Soft Margins for AdaBoost”, Rätsch, Onoda, and Müller generalize AdaBoost by adding a softening parameter $\phi$ to the distribution update step. They relate soft boosting to simulated annealing and to minimization of a generalized exponential loss function. The paper has numerous helpful graphs and experimental data.

Machine Learning Links from Google and http://www.cs.waikato.ac.nz/~bernhard/good-machine-learning-blogs.html

Long, Informative Articles

http://www.swkorridor.dk/en/blogs/machine_learning_applications/

Computer Vision, Image Processing Blog

http://quantombone.blogspot.com/

Causality Blog

http://www.mii.ucla.edu/causality/

Stack Exchange for Statistics

http://stats.stackexchange.com/

Machine Learning News Google Group

https://groups.google.com/forum/?fromgroups#!forum/ml-news

MetaOptimize Stack Exchange

http://metaoptimize.com/qa/

Reddit Machine Learning

http://www.reddit.com/r/machinelearning

Stack Overflow Datamining

http://stackoverflow.com/questions/tagged/data-mining

Stack Overflow Machine Learning

http://stackoverflow.com/questions/tagged/machine-learning

MLoss

http://mloss.org/community/

Software, Machine Learning, Science and Math

Julia language http://julialang.org/blog/

Alexandre Passos’ research blog

http://atpassos.posterous.com/

Real Commentary on Real Machine Learning Techniques & Papers

Anand Sarwate

http://ergodicity.net/

Frequent, Varied articles including ML

Peekaboo Andy’s Computer Vision and Machine Learning Blog

http://peekaboo-vision.blogspot.com/

Andrew Eckford: The Blog

http://andreweckford.blogspot.com/

Lots of notes about conferences

Andrew Rosenberg

http://spokenlanguageprocessing.blogspot.com/

Great Material on NLP and ML

Freakonometrics

http://freakonometrics.blog.free.fr/index.php/

Read for fun

Brian Chesney

http://bpchesney.org/

Informative, Numerical Analysis, Optimization, ML

Daniel Lemire’s blog

http://lemire.me/blog/

Interesting Thoughts on Science, Software, and Global Warming

Frank Nielsen: Computational Information Geometry Wonderland

http://blog.informationgeometry.org/index.php

Blog on Information Theory, Image Processing, Statistics, …

Igor Carron’s Nuit Blanche

http://nuit-blanche.blogspot.com/

Great blog on Statistics, Modelling Dynamic Systems, Data Mining, Compressive Sensing, Signal Processing, …

Jonathan Manton’s Blog

http://jmanton.wordpress.com/

A mathematician writes numerous in-depth posts on Numerical Analysis, Software, Probability, Teaching, …

Jürgen Schmidhuber’s Home Page

http://www.idsia.ch/~juergen/

Not a blog, but it is a good resource

Paul Mineiro: Machined Learnings

http://www.machinedlearnings.com/

Many posts

Radford Neal’s Blog

http://radfordneal.wordpress.com/

Theory of Statistics and Information Theory

Rob Hyndman: Research tips

http://robjhyndman.com/researchtips/

Forecasting

Rod Carvalho: Stochastix

http://stochastix.wordpress.com/

Math, Probability, Haskell, Numerical Methods

Roman Shapovalov: Computer Blindness

http://computerblindness.blogspot.com/

Graphical Models, Learning Theory, Computer Vision, ML

Shubhendu Trivedi: Onionesque Reality

http://onionesquereality.wordpress.com/

Personal Blog, Abstract Ideas, Math, ML, …

Suresh: The Geomblog

http://geomblog.blogspot.com/

Teaching, DataMining, ML, Geometry, Computational Geometry

Sami Badawi: Hadoop comparison

http://blog.samibadawi.com/

Computer Languages, AI, NLP

Terran Lane: Ars Experientia

http://cs.unm.edu/~terran/academic_blog/?m=201201

ML, Teaching

Terry Tao’s Blog

http://terrytao.wordpress.com/

Anything mathematical — deep

Walkingrandomly.com has a post on the Julia computer language, which seems to be getting faster. Carl and I have stumbled across many languages in our efforts to get a fast version of Lisp with good debugging tools. For a while we thought that Haskell was the answer, but recently we have been leaning more toward Clojure and Python. It is hard to pass up languages that can use tools like WEKA, SciPy, and NumPy. Another option is R, but only one of my friends uses R. Clojure is neat because it runs on the JVM and JavaScript (and is being targeted at other languages such as Python, Scheme, and C).

For a speed comparison with Matlab, see this post.

I looked through some machine learning blogs, most of which are listed here, and this is what I found:

Machine Learning Theory – Widely read

http://hunch.net/

Edwin Chen’s blog – Great Visualization, Great Posts

http://blog.echen.me/blog/archives/

Maxim Raginsky’s The Information Structuralist – Some Econ, Some AI

http://infostructuralist.wordpress.com/

Brendan O’Connor’s AI and Social Science blog – Econ, AI, great graphics, fun posts

http://brenocon.com/blog/

Matthew Hurst’s Data Mining: Text Mining, Visualization and Social Media – Lots of posts and images

http://datamining.typepad.com/data_mining/2012/07/index.html

Mikio Braun’s Marginally Interesting: Machine Learning, Computer Science, Jazz, and All That

http://blog.mikiobraun.de/

Bob Carpenter’s LingPipe Blog Natural Language Processing and Text Analytics – Full of interesting stuff

http://lingpipe-blog.com/

Computer Languages, Matlab, Software, Cool Miscellaneous

http://www.walkingrandomly.com/

Sports Predictions, Lots of other cool ML stuff

http://blog.smellthedata.com/

A personal blog with several AI related posts

http://mybiasedcoin.blogspot.com/

Personal blog with many technical posts (some AI)

http://www.daniel-lemire.com/blog/

Three or fewer posts this year:

http://mark.reid.name/iem/

http://blog.smola.org/

http://yaroslavvb.blogspot.com/

http://www.inherentuncertainty.org/

http://justindomke.wordpress.com/

http://nlpers.blogspot.com/

http://www.vetta.org/

http://aicoder.blogspot.com/

http://earningmyturns.blogspot.com/

http://yyue.blogspot.com/

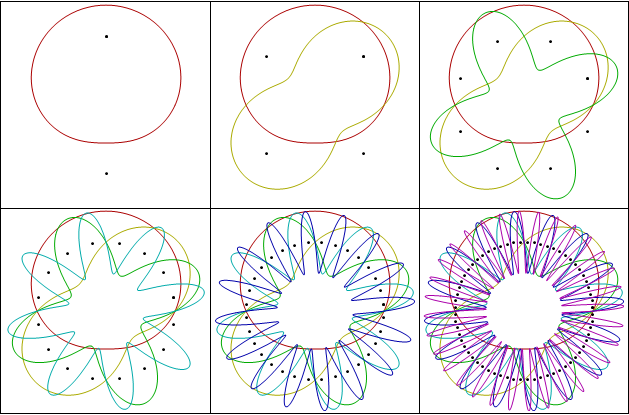

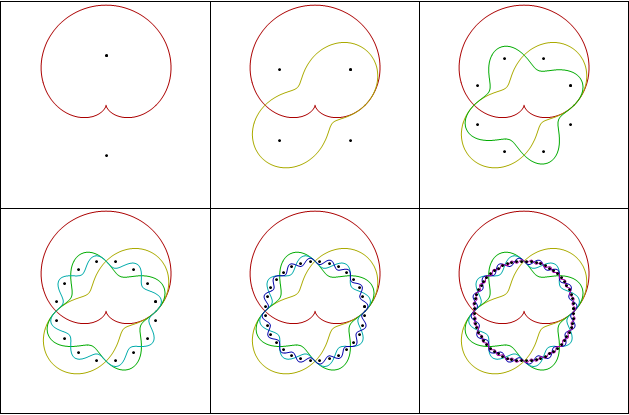

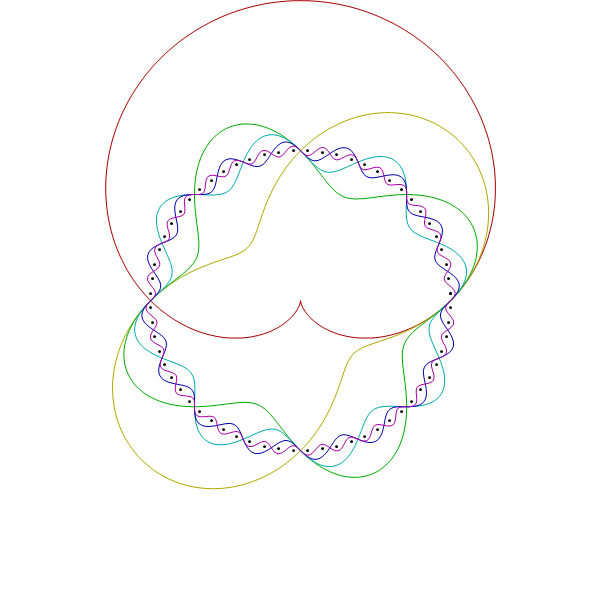

Carl sent me a link to a Venn diagrams post, so that got me thinking. A Venn diagram with $n$ atoms has to represent $2^n$ regions. For example, if $n$ is $2$, then you have the standard Venn diagram below.

Each time you increase $n$ by one, you double the number of regions. This makes me think of binary codes and orthogonal functions. Everybody’s favorite orthogonal functions are the trig functions, so you should be able to draw Venn diagrams with wavy trig functions. Here was my first try.

Those seemed kind of busy, so I dampened the amplitude on the high frequencies (making the slopes the same and possibly increasing artistic appeal).

I really like the last one.
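The doubling argument can be checked numerically. This is only a sketch of the idea, not the code that produced the pictures: I am assuming the $k$-th set is “where $\sin(2^k \pi x)$ is positive” on the interval $(0, 1)$, and the function names `sign_patterns` and `damped_wave` are made up for this example. The sign of each wave acts like one binary digit of $x$, so $n$ waves should produce all $2^n$ in/out combinations. The second function shows the damping: dividing the $k$-th wave by $2^k$ keeps the maximum slope at 1 for every frequency.

```python
import numpy as np

def sign_patterns(n):
    """Return the set of in/out patterns produced by n doubling sine waves."""
    # Midpoints of the 2**n dyadic intervals, where no wave is zero.
    x = (np.arange(2 ** n) + 0.5) / 2 ** n
    k = np.arange(1, n + 1)
    waves = np.sin(np.pi * np.outer(2 ** k, x))  # shape (n, 2**n)
    inside = waves > 0
    return {tuple(bool(b) for b in column) for column in inside.T}

def damped_wave(k, x):
    """sin(2**k * x) scaled by 2**-k, so its slope never exceeds 1."""
    return np.sin(2 ** k * x) / 2 ** k

for n in range(1, 5):
    print(n, len(sign_patterns(n)))
```

Each extra wave splits every existing region in two, which is the same reason an extra bit doubles the number of binary codewords.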

So I decided to look at other AI blogs and I began by typing “artificial intelligence blog” into Google. (That might not be the best way to find AI blogs, but it seemed like a good place to start.) Most of the links were popular blogs with an article on AI (like the Economist commenting on crossword AI). Here are some of the other links I came across:

A Great List of AI resources

http://www.airesources.info/

Blog – Lisp – 3 posts this year

http://p-cos.blogspot.com/

IEEE on AI

http://spectrum.ieee.org/robotics/artificial-intelligence/

AliceBot

http://alicebot.blogspot.com/

Robotics

http://www.robotcompanions.eu/blog/

Two posts this year – One of the posts is very good.

http://artificialintelligence-notes.blogspot.com/

Good ML blog

http://hunch.net/

This blog markets “Drools” software.

http://blog.athico.com/

Great response to a question about resources for AI.

http://stackoverflow.com/questions/821204/best-books-blogs-link-reading-about-ai-and-machine-learning

Social Cognition and AI

http://artificial-socialcognition.blogspot.com/

AI applied to the stock market

http://thekairosaiproject.blogspot.com/

A huge number of videos from PyCon US 2012 are available at pyvideo.org. Marcel Caraciolo at aimotion.blogspot.com posted links to 17 of them on his blog. I have been avoiding Python for machine learning, but maybe I’ve been wrong.

In “How to Use Expert Advice”, Cesa-Bianchi, Freund, Haussler, Helmbold, Schapire, and Warmuth (1997) describe boosting-type algorithms to combine classifiers. They prove a bound of order

$$ \sqrt{n \log(M)}$$

on the total regret where $n$ is the number of data points and $M$ is the number of classifiers. They relate their bounds to the L1 distance between binary strings and apply their results to pattern recognition theory and PAC learning.
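To get a feel for a bound of this shape, here is a minimal sketch of the standard exponentially weighted average forecaster with absolute loss. It is a textbook variant and not necessarily the exact algorithm analyzed in the paper. The function name `exp_weights_regret` and the learning rate $\eta = \sqrt{8 \ln(M) / n}$ are my assumptions; with that choice the known guarantee for this forecaster is regret at most $\sqrt{n \ln(M) / 2}$ on any sequence.

```python
import numpy as np

def exp_weights_regret(expert_preds, outcomes):
    """Run exponential weights and return learner loss minus best expert loss.

    expert_preds: array of shape (n, M) with predictions in [0, 1].
    outcomes:     array of shape (n,) with values in {0, 1}.
    """
    expert_preds = np.asarray(expert_preds, dtype=float)
    outcomes = np.asarray(outcomes, dtype=float)
    n, M = expert_preds.shape
    eta = np.sqrt(8.0 * np.log(M) / n)  # assumed tuning, needs M >= 2
    cum_loss = np.zeros(M)
    learner_loss = 0.0
    for t in range(n):
        # Weights decay exponentially in each expert's past loss.
        w = np.exp(-eta * (cum_loss - cum_loss.min()))
        prediction = w @ expert_preds[t] / w.sum()
        learner_loss += abs(prediction - outcomes[t])
        cum_loss += np.abs(expert_preds[t] - outcomes[t])
    return learner_loss - cum_loss.min()

rng = np.random.default_rng(0)
n, M = 500, 10
preds = rng.random((n, M))
y = rng.integers(0, 2, size=n)
print(exp_weights_regret(preds, y), np.sqrt(n * np.log(M) / 2))
```

The first printed number is the realized regret and the second is the worst-case guarantee; on random data like this the regret is typically well below the guarantee.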