A friend of mine asked:

“Take $n$ samples from a standard normal distribution $N(0,1)$. Which has greater variance: the average or the median?”

(Aug 12, 2013 edit: If you want to understand the answer to this question, read below. If you want to compute the exact standard deviation of the median for a particular sample distribution, click “The Exact Standard Deviation of the Sample Median”.)

This question was mostly answered by Laplace and by Kenney and Keeping.

If the number of samples $n$ drawn from a symmetric continuous distribution with probability density function $f$ is large, then the distribution of the sample median is approximately $N(\overline{x}, \sigma)$, where $\overline{x}$ is the mean of $f$ (which, by symmetry, is also its median), and

$$\sigma = {1 \over 2\sqrt{n}\,f(\overline{x})}.$$

The $1 \over 2\sqrt{n}$ comes from the fact that the number of samples above the mean has mean $n/2$ and standard deviation $\sqrt{n}/2$, so the fraction of samples above the mean has mean $1/2$ and standard deviation $1 \over 2\sqrt{n}$.

For the Gaussian distribution, the estimate for the standard deviation of the sample median is

$${\sqrt{2\pi} \over 2\sqrt{n}} = \sqrt{\pi \over 2n}.$$

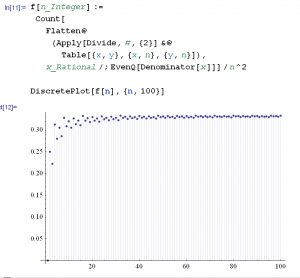

The standard deviation of the sample mean is $1 / \sqrt{n}$. So the standard deviation of the sample median is larger by a factor of $\sqrt{\pi / 2} \approx 1.25$ (i.e., it has about 25% more error). This is a small price to pay if outliers are possible.
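The factor of about 1.25 can be checked with a quick simulation. The following is a minimal sketch using only the Python standard library; the function name `simulate_sd`, the sample size `n = 101`, the number of trials, and the seed are all arbitrary choices made for illustration.

```python
import math
import random
import statistics

def simulate_sd(n, trials, seed=0):
    """Estimate the sd of the sample mean and the sample median of n N(0,1) draws."""
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(trials):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        means.append(statistics.fmean(xs))
        medians.append(statistics.median(xs))
    return statistics.pstdev(means), statistics.pstdev(medians)

n, trials = 101, 5000  # illustrative values, not taken from the post
sd_mean, sd_median = simulate_sd(n, trials)

print(f"sd of mean:   {sd_mean:.4f}  (theory   {1 / math.sqrt(n):.4f})")
print(f"sd of median: {sd_median:.4f}  (estimate {math.sqrt(math.pi / (2 * n)):.4f})")
print(f"ratio:        {sd_median / sd_mean:.3f}  (estimate {math.sqrt(math.pi / 2):.3f})")
```

For a finite $n$ the simulated ratio should land near, but not exactly on, $\sqrt{\pi/2}$, since the formula is a large-$n$ approximation and the simulation has its own sampling noise.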

So we have answered the question for the Gaussian. What about an arbitrary symmetric, continuous, unimodal distribution? The standard deviation of the sample median decreases as the density of the distribution at the median increases. So for sharply peaked distributions like the symmetric Laplacian distribution, the standard deviation of the sample median is actually lower than the standard deviation of the sample mean.
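To make the Laplacian case concrete, assume a Laplace distribution with location 0 and scale 1. Its density at the median is $1/2$ and its variance is 2, so the large-$n$ formula gives a standard deviation of about $1/\sqrt{n}$ for the median against $\sqrt{2/n}$ for the mean. The sketch below checks this by simulation; the sample size, trial count, and seed are again arbitrary, and the sampler relies on the fact that the difference of two independent unit exponentials is Laplace distributed.

```python
import math
import random
import statistics

def laplace_sample(rng):
    # The difference of two independent Exp(1) draws is Laplace(location 0, scale 1).
    return rng.expovariate(1.0) - rng.expovariate(1.0)

rng = random.Random(1)
n, trials = 101, 5000  # illustrative values, not taken from the post
means, medians = [], []
for _ in range(trials):
    xs = [laplace_sample(rng) for _ in range(n)]
    means.append(statistics.fmean(xs))
    medians.append(statistics.median(xs))

sd_mean = statistics.pstdev(means)
sd_median = statistics.pstdev(medians)

print(f"sd of mean:   {sd_mean:.4f}  (theory   {math.sqrt(2 / n):.4f})")
print(f"sd of median: {sd_median:.4f}  (estimate {1 / math.sqrt(n):.4f})")
```

Here the median should come out with the smaller spread, the reverse of the Gaussian case.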

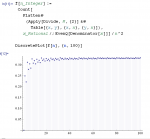

I used approximations above, but with a lot more work and Beta distributions, you can calculate the standard deviation of the sample median to arbitrary precision.
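As a rough illustration of how Beta distributions enter: for odd $n = 2k+1$, the median of $n$ uniform samples on $[0,1]$ follows a Beta$(k+1, k+1)$ distribution, and mapping it through the inverse CDF gives the density of the sample median as ${n! \over k!\,k!}\,F(x)^k (1-F(x))^k f(x)$. The sketch below integrates $x^2$ against that density for the standard normal. It is only one possible approach, not necessarily the method of the linked post; the function name, the trapezoid rule, the integration range, and the restriction to odd $n$ are all assumptions made here.

```python
import math

def exact_sd_median_normal(n, half_width=10.0, steps=20000):
    """Standard deviation of the median of n (odd) samples from N(0, 1)."""
    if n % 2 == 0:
        raise ValueError("this sketch handles odd n only")
    k = (n - 1) // 2
    # log of n! / (k! k!), the normalising constant of the median's density
    log_c = math.lgamma(n + 1) - 2.0 * math.lgamma(k + 1)

    def integrand(x):
        # log F(x) and log(1 - F(x)) = log F(-x), via erfc so the tails stay accurate
        log_lower = math.log(0.5 * math.erfc(-x / math.sqrt(2.0)))
        log_upper = math.log(0.5 * math.erfc(x / math.sqrt(2.0)))
        log_pdf = -0.5 * x * x - 0.5 * math.log(2.0 * math.pi)
        return x * x * math.exp(log_c + k * (log_lower + log_upper) + log_pdf)

    # Composite trapezoid rule on [-half_width, half_width]
    h = 2.0 * half_width / steps
    total = 0.5 * (integrand(-half_width) + integrand(half_width))
    for i in range(1, steps):
        total += integrand(-half_width + i * h)
    # The median has mean 0 by symmetry, so E[X^2] is its variance.
    return math.sqrt(total * h)

for n in (1, 3, 11, 101):
    approx = math.sqrt(math.pi / (2 * n))
    print(f"n={n:4d}  exact={exact_sd_median_normal(n):.6f}  approximation={approx:.6f}")
```

For $n = 1$ the median is the sample itself, so the result should be 1, which makes a convenient sanity check. The gap between the two columns shrinks as $n$ grows.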