Hinton has a new Google tech talk, “Brains, Sex, and Machine Learning”. I think that if you are into neural nets, you’ve got to watch this video. Here’s the abstract:

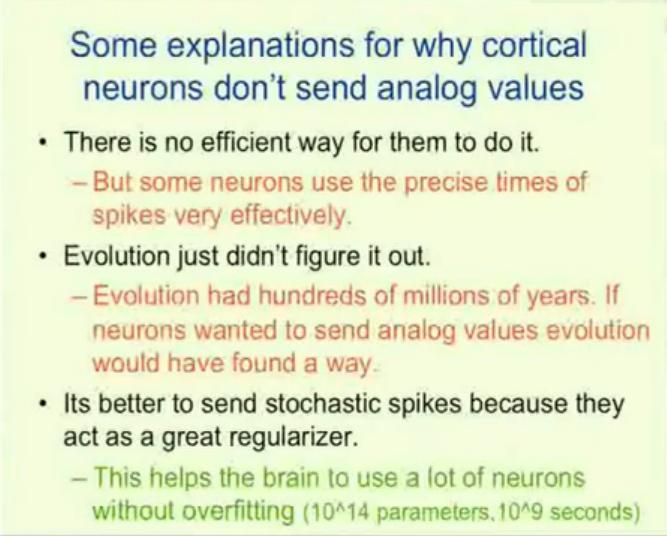

Recent advances in machine learning cast new light on two puzzling biological phenomena. Neurons can use the precise time of a spike to communicate a real value very accurately, but it appears that cortical neurons do not do this. Instead they send single, randomly timed spikes. This seems like a clumsy way to perform signal processing, but a recent advance in machine learning shows that sending stochastic spikes actually works better than sending precise real numbers for the kind of signal processing that the brain needs to do. A closely related advance in machine learning provides strong support for a recently proposed theory of the function of sexual reproduction. Sexual reproduction breaks up large sets of co-adapted genes and this seems like a bad way to improve fitness. However, it is a very good way to make organisms robust to changes in their environment because it forces important functions to be achieved redundantly by multiple small sets of genes and some of these sets may still work when the environment changes. For artificial neural networks, complex co-adaptations between learned feature detectors give good performance on training data but not on new test data. Complex co-adaptations can be reduced by randomly omitting each feature detector with a probability of a half for each training case. This random “dropout” makes the network perform worse on the training data but the number of errors on the test data is typically decreased by about 10%. Nitish Srivastava, Alex Krizhevsky, Ilya Sutskever and Ruslan Salakhutdinov have shown that this leads to large improvements in speech recognition and object recognition.

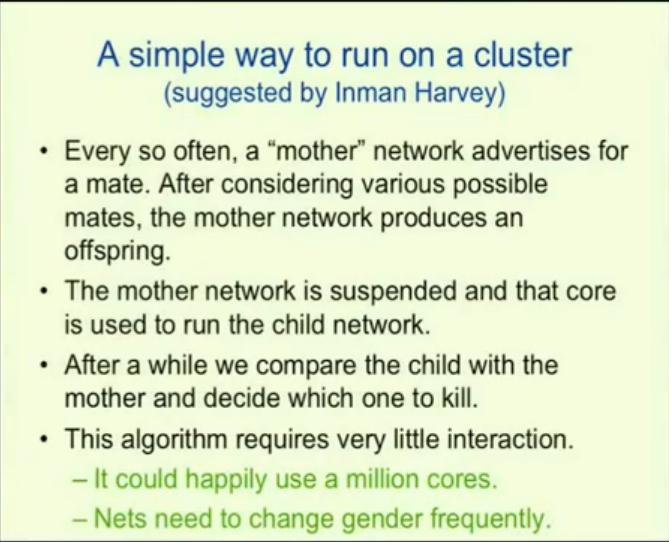

Hinton has a lot of great ideas in this video, including this slide on a massively parallel approach to neural nets.

And this one

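And, as mentioned in the abstract, the idea of “dropout” is very important. (It is similar in spirit to denoising, where the inputs are randomly corrupted during training so that the network cannot rely on any single one of them.)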
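To make the dropout recipe from the abstract concrete, here is a minimal NumPy sketch. The function name `dropout_forward` and its parameters are my own illustration, not code from the talk. It assumes the simplest variant: during training each feature detector is omitted with probability 0.5 for each training case, and at test time every unit is kept but its output is scaled by the keep probability so that the expected input to the next layer stays the same.

```python
import numpy as np

def dropout_forward(activations, rng, p_drop=0.5, training=True):
    """Apply dropout to a batch of hidden activations (hypothetical helper).

    activations: array of shape (n_cases, n_units)
    p_drop: probability of omitting each unit for each training case
    """
    if not training:
        # Test time: keep every unit, but scale by the keep probability
        # so the expected activation matches what was seen in training.
        return activations * (1.0 - p_drop)
    # Training time: draw an independent keep/drop decision for every
    # unit in every training case.
    mask = rng.random(activations.shape) >= p_drop
    return activations * mask

rng = np.random.default_rng(0)
hidden = np.ones((4, 6))  # 4 training cases, 6 feature detectors

train_out = dropout_forward(hidden, rng, training=True)
test_out = dropout_forward(hidden, rng, training=False)
```

Because a fresh mask is drawn for each training case, no feature detector can count on any particular other detector being present, which is what discourages the complex co-adaptations the abstract describes.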

I wonder if the idea of dropout can be applied to create more robust Bayesian networks / Probabilistic Graphical Models. Maybe the same effect can be achieved by introducing a bias (regularization) against edges between nodes (similar to the idea of sparsity).