Check out the Nuit Blanche posts “Predicting the Future: The Steamrollers” and “Predicting the Future: Randomness and Parsimony”, where Igor Carron repeats the well-known mantra of Moore’s law that always seems to catch us by surprise. Carron’s remarks on medicine surprised me, but while reading the articles I also thought, “I should have guessed that would happen.”


At the Top 500 website, I noticed that the main CPUs are made by only four companies: IBM, Intel, AMD, and Nvidia. HP was squeezed out in 2008. It makes me wonder if the trend toward fewer manufacturers will continue. Also, both the #1 supercomputer and the #500 have failed to keep up with the general trendline over the last two or three years. On the other hand, the average computational power of the top 500 has stayed very close to the trendline, which increases by a factor of 1.8 every year.
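To get a feel for what a factor of 1.8 per year means, here is a minimal sketch that converts it into a doubling time and a ten-year growth factor. The function names are my own, and the only input taken from the post is the 1.8 figure.

```python
import math

ANNUAL_GROWTH = 1.8  # yearly growth factor of the trendline quoted above


def doubling_time_years(annual_factor):
    """Years needed to double when growing by annual_factor each year."""
    return math.log(2) / math.log(annual_factor)


def growth_over(years, annual_factor=ANNUAL_GROWTH):
    """Total growth factor after the given number of years."""
    return annual_factor ** years


if __name__ == "__main__":
    print(f"doubling time: {doubling_time_years(ANNUAL_GROWTH):.2f} years")
    print(f"growth over 10 years: {growth_over(10):.0f}x")
```

At that rate the trendline doubles a little more often than once every 14 months.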

I just had to pass along this link from jwz’s blog.

If you want hits on your blog, write about an article that is being read by thousands or millions of people. Some of those readers will Google terms from the article. Today I blogged very briefly about the NY Times article “Scientists See Promise in Deep-Learning Programs” and I was surprised at the number of referrals from Google. Hmm, maybe I should blog about current TV shows (Big Bang? Mythbusters?), movies (Cloud Atlas?), and video games (Call of Duty?). Carl suggested that I apply deep learning, support vector machines, and logistic regression to estimate the number of hits I will get on a post. If I used restricted Boltzmann machines, I could run it in generative mode to create articles.

I was afraid if I went down that route I would eventually have a fantastically popular blog about Britney Spears.

I was reading “Around the Blogs in 80 Summer Hours” at Nuit Blanche and these two links caught my eye:

Implementation: BiLinear Modelling Via Augmented Lagrange Multipliers (BLAM)

In “Hashing Algorithms for Large-Scale Learning”, Li, Shrivastava, Moore, and Konig (2011) modify minwise hashing by storing only the $b$ least significant bits of each hash value. They use the $b$ bits from each of $k$ hash functions as features for training support vector machine and logistic regression classifiers. They compare their results against Count-Min, Vowpal Wabbit, and random hashes on a large spam database. Their algorithm compares favorably against the others.
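Here is a rough sketch of the $b$-bit idea as I understand it, not the authors' code. It assumes each of the $k$ hash functions can be stood in for by a seeded BLAKE2 hash (the paper works with random permutations), and the helper names `bbit_minhash` and `to_feature_indices` are hypothetical. Each $b$-bit value is expanded into a one-hot slot, giving a sparse binary vector of length $k \cdot 2^b$ that a linear SVM or logistic regression can consume.

```python
import hashlib


def _seeded_hash(seed, token):
    """64-bit hash of a token under a given seed (stand-in for a permutation)."""
    data = f"{seed}:{token}".encode("utf-8")
    return int.from_bytes(hashlib.blake2b(data, digest_size=8).digest(), "big")


def bbit_minhash(tokens, k, b):
    """Return k values, each the lowest b bits of one seeded min-hash."""
    items = set(tokens)
    if not items:
        raise ValueError("need at least one token")
    mask = (1 << b) - 1
    return [min(_seeded_hash(seed, t) for t in items) & mask for seed in range(k)]


def to_feature_indices(signature, b):
    """Positions of the 1s in the k * 2**b binary feature vector."""
    width = 1 << b
    return [j * width + value for j, value in enumerate(signature)]


if __name__ == "__main__":
    doc = "cheap pills buy now cheap pills".split()
    signature = bbit_minhash(doc, k=8, b=2)
    print(signature)
    print(to_feature_indices(signature, b=2))
```

Keeping only $b$ bits shrinks storage per hash, at the cost of more accidental collisions between documents.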

I came across this video from NIPS 2011 titled “Big Learning – Algorithms, Systems, & Tools Workshop: Vowpal Wabbit Tutorial”. Vowpal Wabbit is a fast machine learning toolbox for large data sets which uses stochastic gradient descent (the perceptron algorithm is one simple example), L-BFGS, and other ML algorithms. Another video from NIPS 2011 describes Spark, another tool for big data machine learning.

The article “Big Data and the Hourly Workforce” (see Slashdot comments) repeats the known story that Big Data is being used by companies to improve their products. It is used by Target to target customers (by, for example, identifying pregnant women), by the Centers for Disease Control to identify outbreaks, by analysts to predict box office hits from Twitter, and by political campaigns to target the right voters with the right message. The article implies that Big Data algorithms identify patterns in the data which companies can exploit.

But then the article gets into the application of Big Data to the hourly workforce. Companies are focusing on improving worker retention, worker productivity, customer satisfaction, and average revenue per sale. Big Data is being used to increase each of these metrics by five to twenty-five percent. As in baseball (Moneyball), some of the old rules of thumb, like excluding “job hoppers” or even those with an old criminal history, are being rejected in favor of machine learning features that the data supports, like distance from work or the Myers-Briggs personality classification.

In “Future impact: Predicting scientific success”, Acuna, Allesina, and Kording predict the future h-index of a scientist using their current h-index, the square root of the number of articles published, years since first publication, number of distinct journals, and the number of articles in top journals. They vary the coefficients of a linear regression with the number of years in the forecast and note that, in the short term, the largest coefficient is (not surprisingly) the scientist’s current h-index, but for the 10-year h-index forecast, the number of articles in top journals and the number of distinct journals become more important. They achieve an $R^2$ value of 0.67 for neuroscientists, which is significantly larger than the $R^2$ using h-index alone (near 0.4).

Additionally, they provide an on-line tool you can use to make your own predictions.
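For readers who want to try something similar on their own data, here is a small sketch of the two ingredients mentioned above: the five-entry feature vector and the $R^2$ score. The function and argument names are my own, and no coefficients are included because the post does not give them.

```python
import math


def hindex_features(h_index, n_articles, years_since_first, n_journals, n_top_articles):
    """Feature vector described in the post; note the square root on article count."""
    return [h_index, math.sqrt(n_articles), years_since_first, n_journals, n_top_articles]


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares)."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    mean = sum(actual) / len(actual)
    ss_tot = sum((y - mean) ** 2 for y in actual)
    ss_res = sum((y - p) ** 2 for y, p in zip(actual, predicted))
    if ss_tot == 0:
        raise ValueError("actual values have zero variance")
    return 1 - ss_res / ss_tot
```

An $R^2$ of 1 means perfect predictions, while 0 means doing no better than always predicting the mean.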

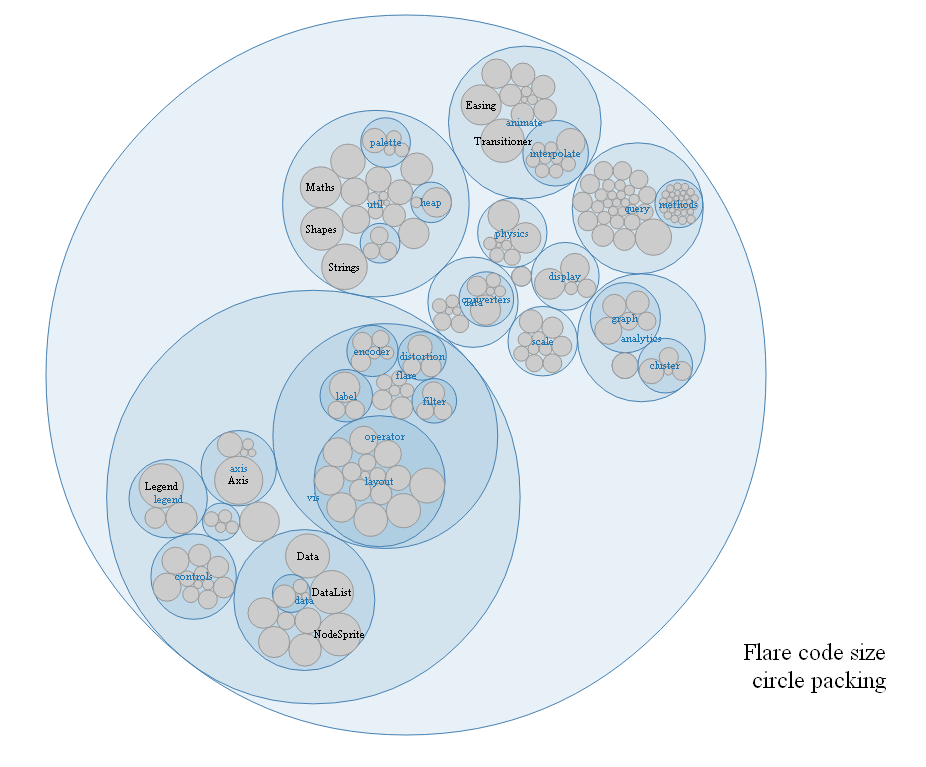

Another cool link from Carl. “D3.js is a JavaScript library for manipulating documents based on data.”