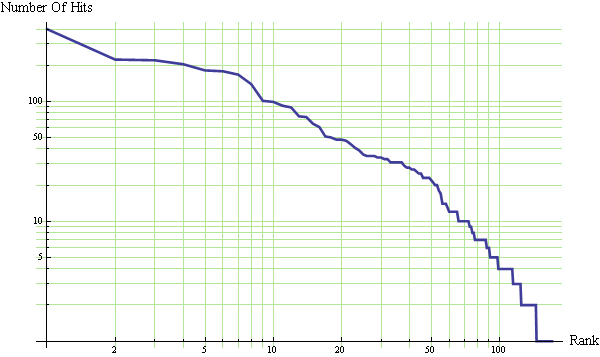

As I look at the hit statistics for the last quarter, I cannot help but wonder how well they fit Zipf’s law (closely related to power laws, the Zipf–Mandelbrot law, and the discrete Pareto distribution). Zipf’s law states that many ranked quantities fall on a straight line when plotted on a log-log diagram of value against rank: city populations, country populations, blog hits, word frequencies, the probability distribution of questions for Alicebot, Wikipedia hits, terrorist attacks, the response time of famous scientists, … So here are the numbers for my blog hits and, below that, a plot of log(blog hits) vs log(rank):

Not too linear. Hmmm.
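To put a rough number on "not too linear", one option is to fit a least-squares line to log(hits) against log(rank) and look at the slope and R². The sketch below is only an illustration: the function name `zipf_fit` and the sample counts are made up for this example and are not the blog's actual statistics. A least-squares fit on a log-log plot is also a fairly crude diagnostic, so treat the result as a sanity check rather than a real test of Zipf's law.

```python
import math


def zipf_fit(hits):
    """Fit log(hits) = intercept + slope * log(rank) by ordinary least squares.

    Returns (slope, intercept, r_squared). All counts must be positive,
    because log(0) is undefined.
    """
    # Rank 1 is the most-visited item.
    ranked = sorted(hits, reverse=True)
    xs = [math.log(rank) for rank in range(1, len(ranked) + 1)]
    ys = [math.log(h) for h in ranked]

    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = (sxy * sxy) / (sxx * syy)
    return slope, intercept, r_squared


# Hypothetical counts that follow hits = 1000 / rank exactly.
example_hits = [1000 / rank for rank in range(1, 21)]
slope, intercept, r_squared = zipf_fit(example_hits)
print(f"slope={slope:.3f}, intercept={intercept:.3f}, r_squared={r_squared:.3f}")
```

For data that follows Zipf's law closely, the slope should come out near -1 and R² near 1. A visibly curved plot like mine would show up as a lower R², or as a slope that shifts depending on which ranks are included in the fit.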

(Though Zipf’s “law” has been known for a long time, this post is at least partly inspired by Terence Tao’s wonderful post “Benford’s law, Zipf’s law, and the Pareto distribution”.)