I will be continuing to post articles on AI for the game 2048. The first two articles were:

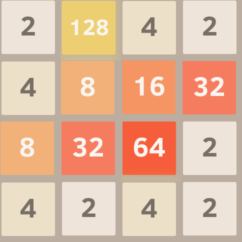

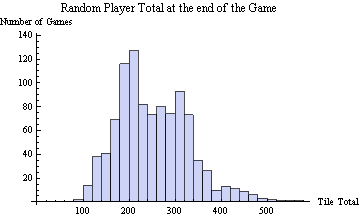

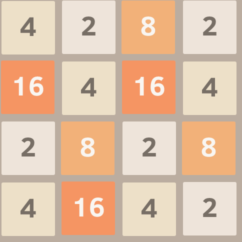

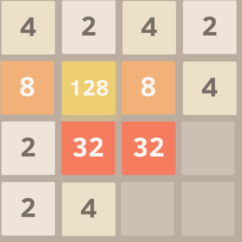

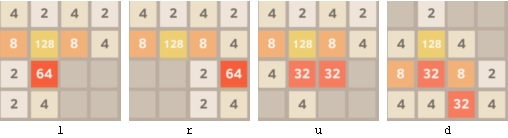

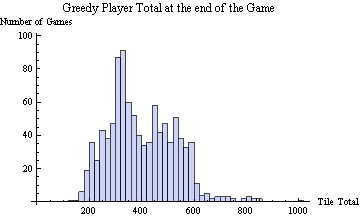

- An AI for 2048 — Part 1 – which described the game 2048 and reported the results of pure random play and a very simple greedy strategy that just tried to maximize it’s score on every move.

- An AI for 2048 — Part 2 Cyclic Blind Strategies – which reported on strategies that just cyclically repeated the same key stokes.

PROGRAMMING

In this article, I will make some comments on the cyclic blind strategies and report on random blind strategies. The cyclic results can be generated from the Mathematica source code that I posted earlier. The Biased Random results below can be obtained by just modifying the strategy function in the Mathematica source code.

CYCLIC BLIND

In the Cyclic Blind Article, we saw that left-down-right-down had the highest performance of any length 4 or less cyclic strategy with an average end tile total (AETT) of about 680. The other cyclic strategies fell into the following seven groupings:

- All four directions alternating vertical and horizontal (dlur, drul) 420 AETT.

- All four directions without alternating vertical and horizontal (durl, dulr, dlru, …) 270 AETT.

- Three directions with one direction repeated twice in a row where the directions that were not repeated were opposite (durr, dllu, dull, drru, …) 390 AETT

- Three directions with one direction repeated twice in a row where the directions that were not repeated were perpendicular (drrl, duur, ddlu, …) 330 AETT

- Three directions with one direction repeated, but not twice in a row, and the directions that were not repeated were perpendicular (dudr, duru, dldu …) 295 AETT

- Three directions with no repetition (dlu, dur, …) 360 AETT

- One Horizontal Direction + One vertical direction with or without repetition 60 AETT.

BLIND RANDOM BIASED STRATEGIES

The other blind strategy to consider is independent random biased selection of directions. For example, someone might try rolling a six sided die and going left on 1, right on a 2 and down for rolls of 3, 4, 5, or 6. I ran 1000 games each for every possible biased random strategy where the strategy was of the form

left with probability L/T, right with probability R/T, up with probability U/T, down with probability D/T,

where T was the total of L, R, U, and D, and L, R, U and D between 0 and 4.

The results are tabulated below

AETT MAX

TILE L/T R/T U/T D/T

11.138 16 1. 0. 0. 0.

55.416 128 0.57 0. 0.43 0.

56.33 128 0.67 0. 0.33 0.

57.17 64 0.5 0. 0.5 0.

57.732 128 0.6 0. 0.4 0.

70.93 16 0.6 0.4 0. 0.

71.246 32 0.57 0.43 0. 0.

71.294 32 0.5 0.5 0. 0.

71.752 32 0.67 0.33 0. 0.

234.42 256 0.4 0.4 0.1 0.1

238.182 256 0.38 0.38 0.12 0.12

241.686 256 0.44 0.33 0.11 0.11

245.49 256 0.44 0.44 0.11 0.

246.148 256 0.4 0.3 0.2 0.1

247.046 256 0.5 0.25 0.12 0.12

247.664 256 0.36 0.36 0.18 0.09

250.372 256 0.43 0.29 0.14 0.14

253.09 256 0.44 0.22 0.22 0.11

254.002 512 0.5 0.38 0.12 0.

255.178 256 0.33 0.33 0.22 0.11

255.242 256 0.33 0.33 0.17 0.17

255.752 256 0.33 0.33 0.25 0.08

257.468 512 0.43 0.43 0.14 0.

258.662 512 0.36 0.27 0.27 0.09

258.832 256 0.57 0.29 0.14 0.

258.9 256 0.36 0.27 0.18 0.18

260.052 256 0.3 0.3 0.2 0.2

261.676 256 0.38 0.25 0.25 0.12

261.902 256 0.31 0.31 0.23 0.15

262.414 256 0.27 0.27 0.27 0.18

263.702 256 0.27 0.27 0.27 0.2

264.034 256 0.31 0.23 0.23 0.23

264.17 512 0.57 0.14 0.14 0.14

264.2 256 0.29 0.29 0.29 0.14

264.226 256 0.31 0.23 0.31 0.15

264.232 256 0.29 0.29 0.21 0.21

264.33 256 0.5 0.17 0.17 0.17

265.328 256 0.33 0.22 0.22 0.22

265.512 256 0.4 0.2 0.3 0.1

265.724 256 0.4 0.2 0.2 0.2

265.918 512 0.33 0.25 0.25 0.17

265.986 256 0.3 0.3 0.3 0.1

266.61 256 0.25 0.25 0.25 0.25

266.796 256 0.29 0.21 0.29 0.21

266.812 256 0.31 0.31 0.31 0.08

267.096 256 0.33 0.17 0.33 0.17

267.5 512 0.33 0.25 0.33 0.08

267.804 256 0.36 0.18 0.27 0.18

267.99 256 0.3 0.2 0.3 0.2

268.414 512 0.43 0.14 0.29 0.14

268.634 256 0.44 0.11 0.33 0.11

271.202 256 0.38 0.12 0.38 0.12

271.646 256 0.33 0.22 0.33 0.11

271.676 512 0.5 0.33 0.17 0.

272.128 256 0.36 0.18 0.36 0.09

273.632 256 0.5 0.12 0.25 0.12

279.82 256 0.4 0.1 0.4 0.1

281.958 256 0.4 0.4 0.2 0.

291.702 512 0.44 0.33 0.22 0.

306.404 256 0.38 0.38 0.25 0.

306.508 512 0.5 0.25 0.25 0.

310.588 512 0.43 0.29 0.29 0.

310.596 512 0.36 0.36 0.27 0.

321.016 512 0.4 0.3 0.3 0.

333.788 512 0.33 0.33 0.33 0.

340.574 512 0.5 0.17 0.33 0.

341.238 512 0.57 0.14 0.29 0.

342.318 512 0.44 0.22 0.33 0.

347.778 512 0.36 0.27 0.36 0.

355.194 512 0.38 0.25 0.38 0.

366.72 512 0.5 0.12 0.38 0.

369.35 512 0.43 0.14 0.43 0.

371.63 512 0.4 0.2 0.4 0.

384.962 512 0.44 0.11 0.44 0.

There are a few things you might notice.

- The best strategy was 44% Left, 11% Right, and 44% down with a AETT of 385 which was much better than pure random (25% in each direction), but not nearly as good as the cyclic strategy left-down-right-down (AETT 686).

- The AETT varies smoothly with small changes in the strategy. This is not surprising. You would think that 55% up, 45% down would have nearly the same result as 56% up and 44% down. The Extreme value theorem then tells us that there must be a best possible random biased strategy.

- The top 17 strategies in the table prohibit one direction with AETTs of 280 to 385.

- If the strategy uses just two directions, then it does very poorly.

Well that’s it for blind strategies. Over the next few posts, we will introduce evaluation functions, null moves, quiescent search, alpha-beta prunning, and expectimax search.